The FAIR team at Meta, in collaboration with the Georgia Institute of Technology, has developed a new framework called CATransformers. Built around the goal of reducing carbon emissions, it aims to significantly cut the carbon footprint of AI systems by jointly optimizing model architectures and hardware, laying a foundation for sustainable AI development.

As machine learning spreads across fields from recommendation systems to autonomous driving, its computational demands keep growing, and so does its energy consumption. Traditional AI systems usually require powerful computing resources and rely on customized hardware accelerators. These consume large amounts of energy during training and inference, which drives up operational carbon emissions. In addition, the hardware's full lifecycle, including manufacturing and disposal, releases "embodied carbon," adding further to the environmental burden.
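The split between operational carbon and the carbon tied to the hardware lifecycle can be made concrete with a small accounting sketch. This is an illustrative simplification and not the carbon model used by CATransformers: the function names, the linear amortization of manufacturing emissions over device lifetime, and the parameters are all assumptions made for this example.

```python
def operational_carbon_g(energy_kwh: float, grid_intensity_g_per_kwh: float) -> float:
    """Emissions from electricity used while the system runs (grams CO2e)."""
    return energy_kwh * grid_intensity_g_per_kwh


def amortized_embodied_carbon_g(
    embodied_total_g: float, lifetime_hours: float, hours_used: float
) -> float:
    """Share of manufacturing and disposal emissions attributed to a workload.

    Assumption: embodied carbon is spread evenly over the device lifetime.
    """
    if lifetime_hours <= 0:
        raise ValueError("lifetime_hours must be positive")
    return embodied_total_g * hours_used / lifetime_hours


def total_carbon_g(
    energy_kwh: float,
    grid_intensity_g_per_kwh: float,
    embodied_total_g: float,
    lifetime_hours: float,
    hours_used: float,
) -> float:
    """Total footprint = operational emissions + amortized embodied emissions."""
    return operational_carbon_g(
        energy_kwh, grid_intensity_g_per_kwh
    ) + amortized_embodied_carbon_g(embodied_total_g, lifetime_hours, hours_used)
```

Under this simplified model, a chip that draws less power but costs more carbon to manufacture can still have the larger total footprint, which is why looking at operational efficiency alone can mislead.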

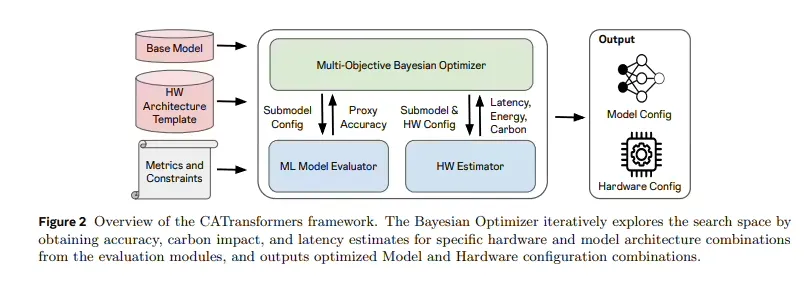

Current emission reduction strategies tend to focus on operational efficiency, for example by optimizing energy consumption and increasing hardware utilization. In doing so, they often overlook the carbon emitted during hardware design and manufacturing. CATransformers was developed to address this gap. It uses a multi-objective Bayesian optimization engine to jointly evaluate model architectures and hardware accelerators, balancing latency, energy consumption, accuracy, and total carbon footprint.
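To illustrate what balancing several objectives means in practice, the sketch below filters a set of candidate designs down to its Pareto front: the designs that no other candidate beats on every objective at once. This is not the Bayesian optimization engine itself, only the dominance test that multi-objective methods commonly rely on. The `Candidate` class, its field names, and the units are hypothetical and chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A hypothetical model + accelerator design point (all fields illustrative)."""
    name: str
    latency_ms: float
    energy_mj: float
    error: float      # 1 - accuracy, so that lower is better for every objective
    carbon_g: float   # total carbon: operational plus embodied


def objectives(c: Candidate) -> tuple[float, float, float, float]:
    """All four objectives, each expressed as lower-is-better."""
    return (c.latency_ms, c.energy_mj, c.error, c.carbon_g)


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on every objective and strictly better on one."""
    oa, ob = objectives(a), objectives(b)
    return all(x <= y for x, y in zip(oa, ob)) and any(x < y for x, y in zip(oa, ob))


def pareto_front(candidates: list[Candidate]) -> list[Candidate]:
    """Keep only the candidates that no other candidate dominates."""
    return [
        c
        for c in candidates
        if not any(dominates(other, c) for other in candidates if other is not c)
    ]
```

A design that is fastest but carbon-heavy and one that is slightly slower but cleaner can both sit on the front; choosing between them is then an explicit trade-off rather than a side effect of optimizing a single metric.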

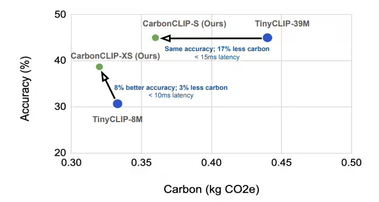

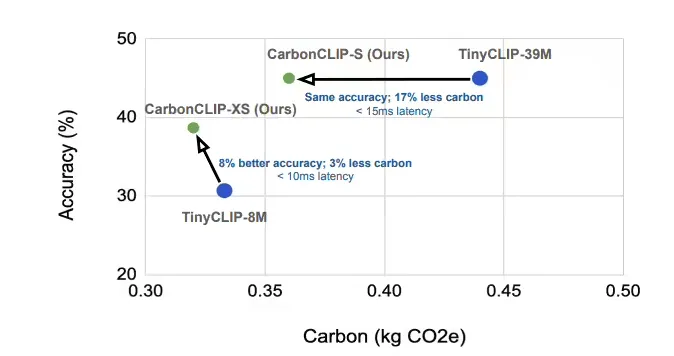

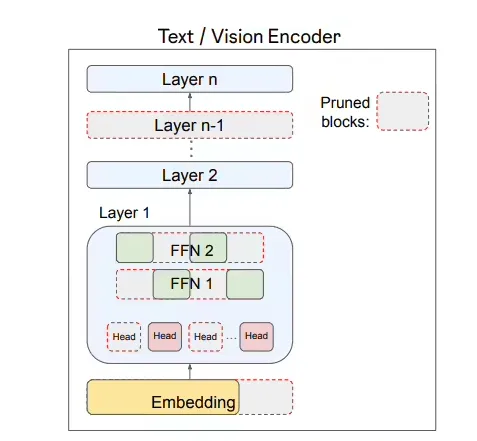

CATransformers is optimized specifically for edge inference devices. By pruning a large CLIP model, it generates variants with lower carbon emissions and strong performance. For example, CarbonCLIP-S matches the accuracy of TinyCLIP-39M while reducing carbon emissions by 17% and keeping latency below 15 milliseconds. CarbonCLIP-XS, meanwhile, is 8% more accurate than TinyCLIP-8M and reduces carbon emissions by 3%, with latency below 10 milliseconds.

The research finds that designs optimized for latency alone can raise carbon emissions by as much as 2.4 times, a hidden cost that latency figures do not reveal. In contrast, a design strategy that considers both carbon emissions and latency can reduce total emissions by 19% to 20% with negligible latency loss. CATransformers thus offers a foundation for designing sustainable machine learning systems, showing that an AI development approach that accounts for hardware capabilities and carbon impact from the outset can deliver both performance and sustainability.

As AI technology continues to evolve, CATransformers offers the industry a practical path toward lower emissions.