Recently, Meta's FAIR team collaborated with the Georgia Institute of Technology to develop CATransformers, a framework that makes carbon emissions a core objective in the design of AI systems. By jointly optimizing model architectures and hardware accelerator design, the framework reduces the total carbon footprint of AI systems, marking an important step toward sustainable AI development.

As machine learning spreads into applications such as recommendation systems and autonomous driving, its environmental cost can no longer be ignored. Many AI systems demand substantial computational resources and often run on custom hardware accelerators. The energy consumed during training and inference directly drives up operational carbon emissions. On top of that, the hardware's full lifecycle, from manufacturing to disposal, generates embodied ("hidden") carbon that further adds to the environmental burden.

Most existing emission-reduction methods focus on operational efficiency, for example cutting the energy used during training and inference or raising hardware utilization. However, these methods often overlook the carbon emitted during hardware design and manufacturing, and they do not account for how model design and hardware efficiency influence each other.
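The split between operational and embodied carbon can be made concrete with a back-of-the-envelope calculation. The sketch below assumes a simple additive model (total = embodied + energy used × grid carbon intensity); the `Deployment` class, its fields, and every number are hypothetical illustrations, not CATransformers' actual carbon model or data from the study.

```python
from dataclasses import dataclass

J_PER_KWH = 3.6e6  # joules in one kilowatt-hour


@dataclass
class Deployment:
    """Hypothetical inputs for one accelerator deployment (illustration only)."""
    embodied_kg: float             # manufacturing + end-of-life carbon, kgCO2e
    energy_per_inference_j: float  # energy drawn per inference, joules
    lifetime_inferences: float     # inferences served over the hardware's life
    grid_g_per_kwh: float          # grid carbon intensity, gCO2e per kWh


def operational_kg(d: Deployment) -> float:
    """Carbon from electricity used while serving inferences, in kgCO2e."""
    energy_kwh = d.energy_per_inference_j * d.lifetime_inferences / J_PER_KWH
    return energy_kwh * d.grid_g_per_kwh / 1000.0  # grams -> kilograms


def total_kg(d: Deployment) -> float:
    """Assumed additive model: total = embodied (hidden) + operational carbon."""
    return d.embodied_kg + operational_kg(d)


# Made-up numbers; not taken from the CATransformers study.
example = Deployment(embodied_kg=10.0, energy_per_inference_j=0.36,
                     lifetime_inferences=1e9, grid_g_per_kwh=400.0)
print(f"operational: {operational_kg(example):.1f} kg")  # operational: 40.0 kg
print(f"total: {total_kg(example):.1f} kg")              # total: 50.0 kg
print(f"embodied share: {example.embodied_kg / total_kg(example):.0%}")
```

Under these assumed numbers, an optimization that only trims energy per inference shrinks the 40 kg operational term but never touches the 10 kg embodied term, which is exactly the blind spot described above.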

CATransformers is designed to fill this gap. Using a multi-objective Bayesian optimization engine, the framework jointly evaluates candidate model architectures and hardware accelerators, balancing latency, energy consumption, accuracy, and total carbon footprint. For edge inference devices in particular, CATransformers generates model variants by pruning large CLIP models and pairs them with hardware estimation tools to analyze the trade-off between carbon emissions and performance.
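With several competing objectives there is usually no single best design, only a set of trade-offs that no other candidate beats on every metric (the Pareto front). Multi-objective optimizers, Bayesian ones included, typically try to approximate that set while evaluating as few candidates as possible. The sketch below deliberately skips the Bayesian search itself and shows only the Pareto-dominance check over the four objectives named above; the `Candidate` fields, names, and numbers are hypothetical.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    """A hypothetical (pruned model, accelerator) pairing and its estimated metrics."""
    name: str
    accuracy: float    # higher is better
    latency_ms: float  # lower is better
    energy_mj: float   # energy per inference, lower is better
    carbon_g: float    # total (embodied + operational) carbon, lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if `a` is no worse than `b` on every objective and strictly better on one."""
    no_worse = (a.accuracy >= b.accuracy and a.latency_ms <= b.latency_ms
                and a.energy_mj <= b.energy_mj and a.carbon_g <= b.carbon_g)
    better = (a.accuracy > b.accuracy or a.latency_ms < b.latency_ms
              or a.energy_mj < b.energy_mj or a.carbon_g < b.carbon_g)
    return no_worse and better


def pareto_front(cands: List[Candidate]) -> List[Candidate]:
    """Keep candidates no other candidate dominates (nothing dominates itself)."""
    return [c for c in cands if not any(dominates(o, c) for o in cands)]


# Illustrative numbers only.
candidates = [
    Candidate("cand-A", 0.70, 12.0, 5.0, 100.0),
    Candidate("cand-B", 0.68, 10.0, 6.0, 120.0),
    Candidate("cand-C", 0.65, 14.0, 7.0, 130.0),  # dominated by cand-A and cand-B
    Candidate("cand-D", 0.72, 20.0, 8.0, 90.0),
]
front = pareto_front(candidates)
print([c.name for c in front])  # ['cand-A', 'cand-B', 'cand-D']
```

A Bayesian optimizer adds a surrogate model that predicts these metrics for untested designs, so expensive hardware estimates are spent only on promising candidates; the dominance rule that decides which designs count as balanced stays the same.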

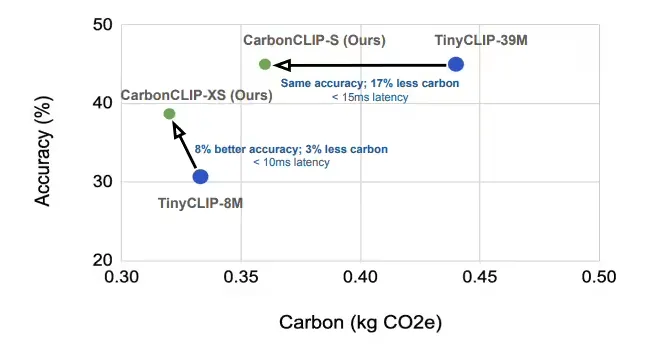

According to the study, CarbonCLIP-S, one of the models produced by CATransformers, matches the accuracy of TinyCLIP-39M while reducing total carbon emissions by 17%, with latency kept under 15 milliseconds. A more compact variant, CarbonCLIP-XS, is 8% more accurate than TinyCLIP-8M while reducing carbon emissions by 3%, with latency below 10 milliseconds.

Notably, optimizing for latency alone can increase embodied (hidden) carbon by up to 2.4 times. By contrast, jointly optimizing carbon emissions and latency achieves a 19-20% reduction in total emissions with minimal latency loss. By embedding environmental metrics into the design process, CATransformers lays a foundation for sustainable machine learning systems, and as AI adoption continues to grow, it offers the industry a practical path to lower emissions.
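Why latency-only optimization can backfire is easiest to see with a toy selection problem: the fastest design often relies on a larger accelerator whose manufacturing carbon dominates. The sketch below compares two selection rules over three made-up design points; the `Design` class, the 15 ms budget, and all carbon figures are assumptions for illustration, not the study's search procedure, and the resulting ratios are not the study's reported numbers.

```python
from typing import NamedTuple


class Design(NamedTuple):
    """A hypothetical model/accelerator design point; carbon in gCO2e."""
    name: str
    latency_ms: float
    embodied_g: float     # hidden carbon from manufacturing the hardware
    operational_g: float  # carbon from energy used at inference time

    @property
    def total_g(self) -> float:
        return self.embodied_g + self.operational_g


designs = [
    Design("big-accel", latency_ms=8.0, embodied_g=240.0, operational_g=60.0),
    Design("mid-accel", latency_ms=11.0, embodied_g=120.0, operational_g=70.0),
    Design("small-accel", latency_ms=18.0, embodied_g=60.0, operational_g=90.0),
]
LATENCY_BUDGET_MS = 15.0  # assumed service-level target

# Rule 1: minimize latency only.
latency_only = min(designs, key=lambda d: d.latency_ms)

# Rule 2: minimize total carbon among designs that meet the latency budget.
feasible = [d for d in designs if d.latency_ms <= LATENCY_BUDGET_MS]
carbon_aware = min(feasible, key=lambda d: d.total_g)

print(latency_only.name, carbon_aware.name)  # big-accel mid-accel
print(f"embodied ratio: {latency_only.embodied_g / carbon_aware.embodied_g:.1f}x")  # 2.0x
print(f"total carbon saved: {1 - carbon_aware.total_g / latency_only.total_g:.1%}")
```

The carbon-aware rule gives up 3 ms of latency, still within the assumed budget, in exchange for half the embodied carbon, mirroring in miniature the trade-off the study reports.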

Key points:

🌱 Meta collaborates with the Georgia Institute of Technology to develop the CATransformers framework, focusing on carbon emissions in AI systems.

💡 CATransformers significantly reduces the carbon footprint of AI technology by optimizing model architectures and hardware performance.

⚡ Research shows that jointly optimizing carbon emissions and latency can reduce total emissions by 19-20%.