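As AI applications multiply, Spring AI 1.0 ships with built-in observability. This article shows how to combine Spring AI with Micrometer to monitor an enterprise AI application: controlling cost, tuning performance, and tracing requests end to end.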

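Why do Spring AI applications need observability?

The pain point: AI service costs spiralling out of control

In enterprise AI applications that call services such as DeepSeek, OpenAI, Google Gemini, or Azure OpenAI, keeping cost under control is a serious challenge:

• Opaque token consumption: there is no precise view of what each AI call costs

• Runaway spending: at scale, AI service fees can grow rapidly

• Hard-to-locate bottlenecks: the AI call chain is complex, which makes troubleshooting difficult

• Inefficient resource use: optimization decisions are made without supporting data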

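The value of Spring AI observability

Spring AI's observability features address each of these pain points:

• ✅ Precise token monitoring: track input and output token consumption in real time, down to the individual call

• ✅ Cost control: base cost-optimization strategies on actual usage statistics

• ✅ In-depth performance analysis: identify bottlenecks in AI calls and reduce response time

• ✅ Full request tracing: record the end-to-end path of each request through the Spring AI application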

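Hands-on: building an observable Spring AI translation application

Step 1: Initialize the Spring AI project

Create a Spring Boot project at start.spring.io[1] and add the core Spring AI dependencies:

Maven dependency configuration (versions managed by the Spring AI BOM):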

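    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.ai</groupId>
                <artifactId>spring-ai-bom</artifactId>
                <version>1.0.0</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>

    <dependencies>
        <!-- Spring AI DeepSeek integration -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-starter-model-deepseek</artifactId>
        </dependency>
        <!-- Spring Boot Web -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <!-- Spring Boot Actuator (monitoring endpoints) -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-actuator</artifactId>
        </dependency>
        <!-- Prometheus registry, needed for the Prometheus export enabled in the configuration -->
        <dependency>
            <groupId>io.micrometer</groupId>
            <artifactId>micrometer-registry-prometheus</artifactId>
        </dependency>
        <!-- Lombok, used by the controller and DTO classes in Step 3 -->
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
    </dependencies>

Step 2: Configure the Spring AI client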

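Main application class:

    import org.springframework.ai.chat.client.ChatClient;
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.context.annotation.Bean;

    @SpringBootApplication
    public class SpringAiTranslationApplication {

        public static void main(String[] args) {
            SpringApplication.run(SpringAiTranslationApplication.class, args);
        }

        // Build a ChatClient from the auto-configured builder
        @Bean
        public ChatClient chatClient(ChatClient.Builder builder) {
            return builder.build();
        }
    }

Spring AI configuration file: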

    # Spring AI observability configuration
    management:
      endpoints:
        web:
          exposure:
            include: "*"
      endpoint:
        health:
          show-details: always
      # Spring Boot 3.x property path (replaces management.metrics.export.prometheus.enabled)
      prometheus:
        metrics:
          export:
            enabled: true
    spring:
      threads:
        virtual:
          enabled: true
      ai:
        deepseek:
          api-key: ${DEEPSEEK_API_KEY}
          chat:
            options:
              model: deepseek-chat
              temperature: 0.8

Set the environment variable:

    export DEEPSEEK_API_KEY=your-deepseek-api-key

Step 3: Build the Spring AI translation service

Translation controller:

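    import lombok.AllArgsConstructor;
    import lombok.Builder;
    import lombok.Data;
    import lombok.NoArgsConstructor;
    import lombok.RequiredArgsConstructor;
    import lombok.extern.slf4j.Slf4j;
    import org.springframework.ai.chat.client.ChatClient;
    import org.springframework.web.bind.annotation.PostMapping;
    import org.springframework.web.bind.annotation.RequestBody;
    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    @RequestMapping("/api/v1")
    @RequiredArgsConstructor
    @Slf4j
    public class SpringAiTranslationController {

        // Use the ChatClient bean from the application class so that calls
        // are recorded under the ChatClient metrics and spans discussed below.
        private final ChatClient chatClient;

        @PostMapping("/translate")
        public TranslationResponse translate(@RequestBody TranslationRequest request) {
            log.info("Spring AI translation request: {} -> {}",
                    request.getSourceLanguage(), request.getTargetLanguage());

            String prompt = String.format(
                    "As a professional translator, translate the following %s text into %s, "
                            + "preserving the tone and style of the original:\n%s",
                    request.getSourceLanguage(),
                    request.getTargetLanguage(),
                    request.getText()
            );

            String translatedText = chatClient.prompt().user(prompt).call().content();

            return TranslationResponse.builder()
                    .originalText(request.getText())
                    .translatedText(translatedText)
                    .sourceLanguage(request.getSourceLanguage())
                    .targetLanguage(request.getTargetLanguage())
                    .timestamp(System.currentTimeMillis())
                    .build();
        }
    }

    // @NoArgsConstructor lets Jackson deserialize the request body;
    // @AllArgsConstructor is then required by @Builder.
    @Data
    @Builder
    @NoArgsConstructor
    @AllArgsConstructor
    class TranslationRequest {
        private String text;
        private String sourceLanguage;
        private String targetLanguage;
    }

    @Data
    @Builder
    class TranslationResponse {
        private String originalText;
        private String translatedText;
        private String sourceLanguage;
        private String targetLanguage;
        private Long timestamp;
    }

Step 4: Test the Spring AI translation API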

    curl -X POST http://localhost:8080/api/v1/translate \
      -H "Content-Type: application/json" \
      -d '{
        "text": "Spring AI makes AI integration incredibly simple and powerful",
        "sourceLanguage": "English",
        "targetLanguage": "Chinese"
      }'
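For callers that prefer Java over curl, the same request can be sent with the JDK's built-in `java.net.http.HttpClient`. This is only a sketch: the class name `TranslateClientSketch` and its helper methods are invented for illustration, and it assumes the application is running on `localhost:8080` as in the command above.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical helper class; not part of the article's application.
final class TranslateClientSketch {

    // Builds a POST request equivalent to the curl command.
    static HttpRequest buildRequest(String baseUrl, String text, String source, String target) {
        String json = String.format(
                "{\"text\":\"%s\",\"sourceLanguage\":\"%s\",\"targetLanguage\":\"%s\"}",
                escape(text), escape(source), escape(target));
        return HttpRequest.newBuilder(URI.create(baseUrl + "/api/v1/translate"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(json, StandardCharsets.UTF_8))
                .build();
    }

    // Minimal JSON escaping (backslash and double quote only); enough for this sketch.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = buildRequest(
                "http://localhost:8080",
                "Spring AI makes AI integration incredibly simple and powerful",
                "English",
                "Chinese");
        // Sends the request; requires the translation application to be running.
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```

A real client would normally serialize the body with a JSON library instead of `String.format`; the hand-rolled escaping here only keeps the sketch dependency-free.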

Example response:

    {
      "originalText": "Spring AI makes AI integration incredibly simple and powerful",
      "translatedText": "Spring AI让AI集成变得极其简单而强大",
      "sourceLanguage": "English",
      "targetLanguage": "Chinese",
      "timestamp": 1704067200000
    }

Spring AI monitoring metrics in depth

Core metric 1: Spring AI operation performance

Metric endpoint: /actuator/metrics/spring.ai.chat.client.operation

    {
      "name": "spring.ai.chat.client.operation",
      "description": "Spring AI ChatClient operation performance metrics",
      "baseUnit": "seconds",
      "measurements": [
        { "statistic": "COUNT", "value": 15 },
        { "statistic": "TOTAL_TIME", "value": 8.456780293 },
        { "statistic": "MAX", "value": 2.123904083 }
      ],
      "availableTags": [
        { "tag": "gen_ai.operation.name", "values": ["framework"] },
        { "tag": "spring.ai.kind", "values": ["chat_client"] }
      ]
    }

Business value:

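• Monitor how often the Spring AI translation service is called

• Analyze the distribution of Spring AI response times

• Identify Spring AI performance bottlenecks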
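As a small illustration of reading these measurements, the mean latency is simply TOTAL_TIME divided by COUNT. The sketch below hard-codes the sample values from the JSON above; the class and method names are made up for this example, and a real dashboard would usually compute this in the monitoring backend instead.

```java
import java.util.Locale;

// Hypothetical helper; the input values are copied from the sample metric response.
final class LatencySummarySketch {

    // Mean latency in seconds = TOTAL_TIME / COUNT; returns 0 when no calls were recorded.
    static double meanSeconds(long count, double totalTimeSeconds) {
        return count == 0 ? 0.0 : totalTimeSeconds / count;
    }

    public static void main(String[] args) {
        long count = 15;
        double totalTime = 8.456780293;
        double max = 2.123904083;

        double mean = meanSeconds(count, totalTime);
        // Locale.ROOT keeps the decimal separator stable across environments.
        System.out.println(String.format(Locale.ROOT, "mean=%.3fs max=%.3fs", mean, max));
    }
}
```

For the sample data the mean is about 0.564 s against a maximum of about 2.124 s, so the slowest call took several times longer than the average one; a gap like that is the kind of signal worth following up in the traces.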

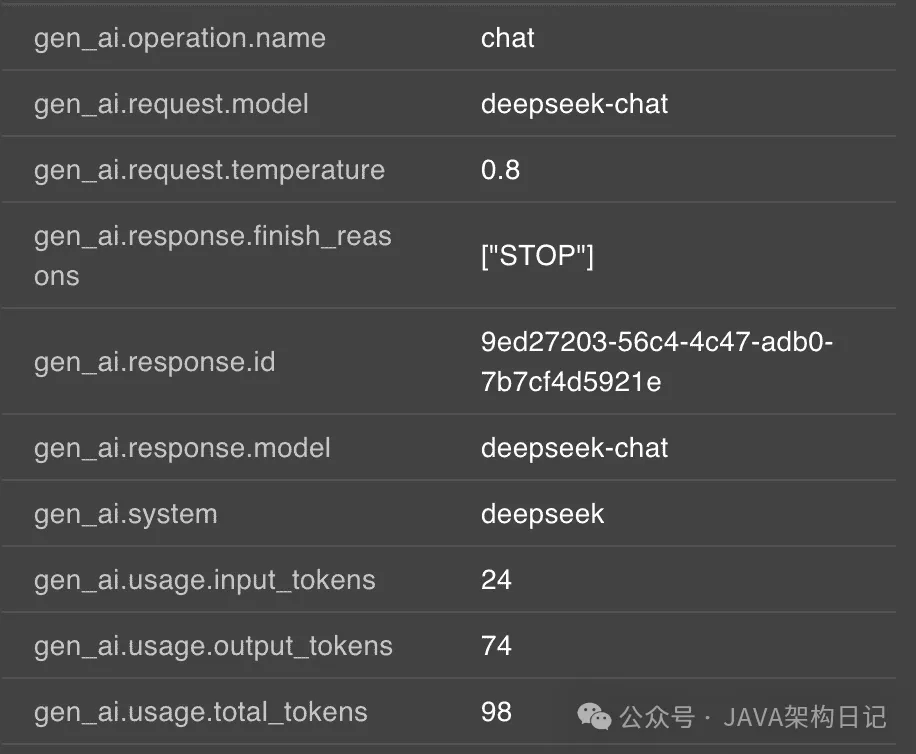

Core metric 2: Spring AI token usage tracking

Metric endpoint: /actuator/metrics/gen_ai.client.token.usage

    {
      "name": "gen_ai.client.token.usage",
      "description": "Spring AI token usage statistics",
      "measurements": [
        { "statistic": "COUNT", "value": 1250 }
      ],
      "availableTags": [
        { "tag": "gen_ai.response.model", "values": ["deepseek-chat"] },
        { "tag": "gen_ai.request.model", "values": ["deepseek-chat"] },
        { "tag": "gen_ai.token.type", "values": ["output", "input", "total"] }
      ]
    }

Cost-control value:

• Calculate Spring AI service costs precisely

• Refine prompt design to reduce token consumption

• Set budget policies based on actual usage
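To make the cost calculation concrete, here is a rough sketch that turns input and output token counts into an estimated charge. Everything numeric in it is an assumption: the per-million-token prices and the 800/450 token split are placeholders, not DeepSeek's actual pricing or values from the metric above, and `TokenCostSketch` is a name invented for this example.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

// Hypothetical cost estimator; prices must be replaced with the provider's current rates.
final class TokenCostSketch {

    private static final BigDecimal ONE_MILLION = BigDecimal.valueOf(1_000_000);

    // cost = (inputTokens * inputPrice + outputTokens * outputPrice) / 1,000,000,
    // where both prices are quoted per one million tokens.
    static BigDecimal estimateCost(long inputTokens, long outputTokens,
                                   BigDecimal inputPricePerMillion,
                                   BigDecimal outputPricePerMillion) {
        BigDecimal input = BigDecimal.valueOf(inputTokens).multiply(inputPricePerMillion);
        BigDecimal output = BigDecimal.valueOf(outputTokens).multiply(outputPricePerMillion);
        return input.add(output).divide(ONE_MILLION, 6, RoundingMode.HALF_UP);
    }

    public static void main(String[] args) {
        // Placeholder prices and token counts, for illustration only.
        BigDecimal cost = estimateCost(800, 450, new BigDecimal("0.50"), new BigDecimal("1.50"));
        System.out.println("Estimated cost: " + cost.toPlainString());
    }
}
```

In practice the token counts would come from the `gen_ai.client.token.usage` metric filtered by the `gen_ai.token.type` tag, since input and output tokens are typically priced differently.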

Spring AI call tracing in practice

Step 1: Integrate Zipkin tracing

Add the tracing dependencies:

    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-tracing-bridge-brave</artifactId>
    </dependency>
    <dependency>
        <groupId>io.zipkin.reporter2</groupId>
        <artifactId>zipkin-reporter-brave</artifactId>
    </dependency>

Step 2: Start the Zipkin server

    docker run -d \
      --name zipkin-spring-ai \
      -p 9411:9411 \
      -e STORAGE_TYPE=mem \
      openzipkin/zipkin:latest

Step 3: Configure Spring AI tracing

    management:
      zipkin:
        tracing:
          endpoint: http://localhost:9411/api/v2/spans
      tracing:
        sampling:
          # 1.0 samples every request; lower this in production to reduce overhead
          probability: 1.0

Spring AI tracing results

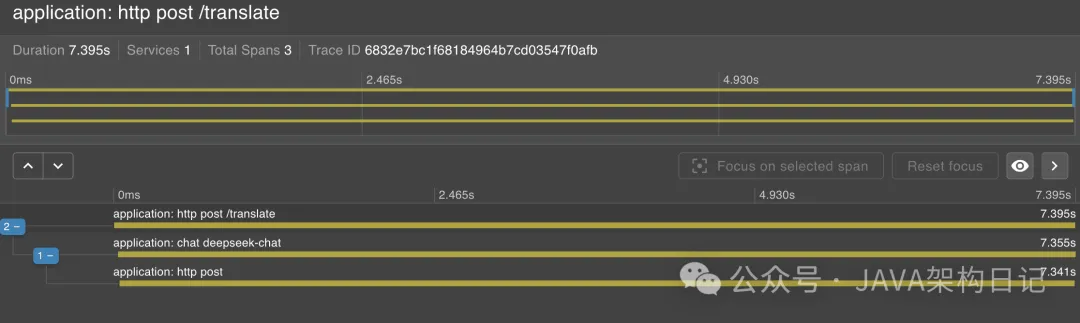

The Zipkin UI shows the Spring AI call chain:

[Figure: Spring AI call chain overview]

Detailed Spring AI call timing:

[Figure: Spring AI call timing analysis]

Zipkin makes the following clearly visible:

• How long each Spring AI ChatClient call takes

• The response time of the DeepSeek API

• The complete Spring AI request chain

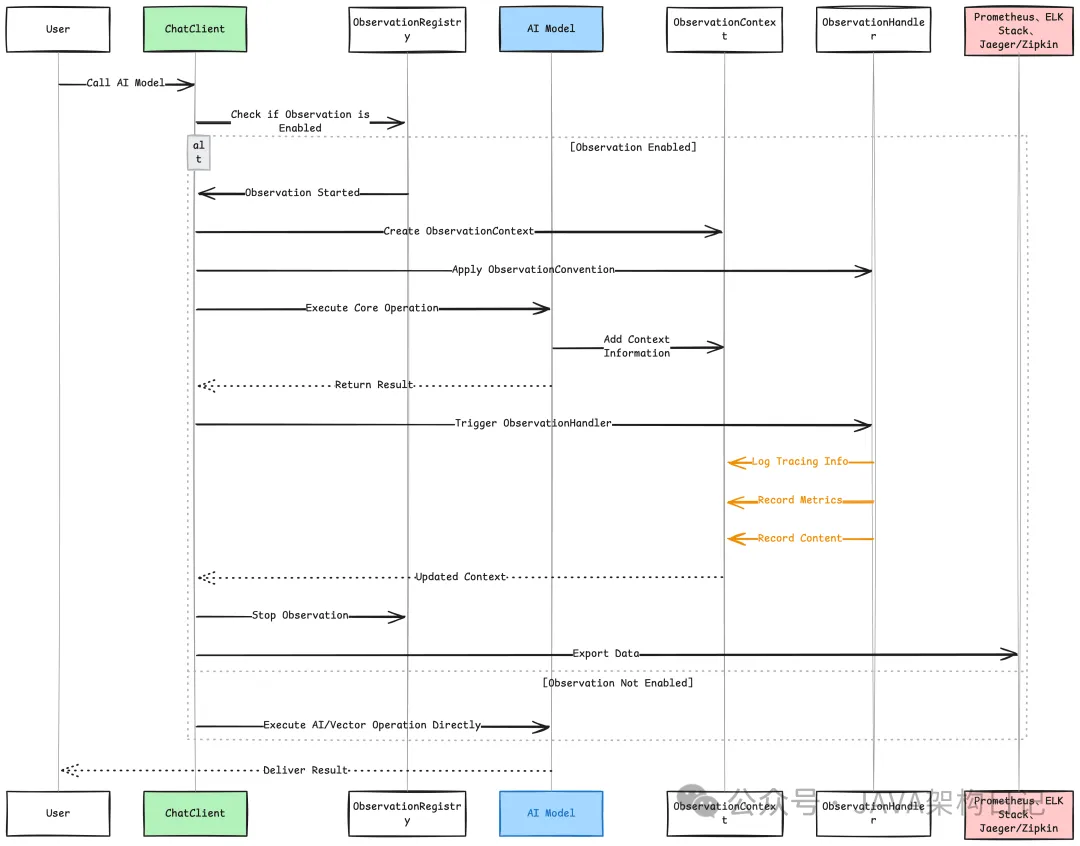

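Spring AI Observations: a look at the source architecture

Core flow of Spring AI observability:

[Figure: Spring AI Observations architecture diagram]

Spring AI observability is built on the following core components:

1. ChatClientObservationConvention: defines the naming and tagging conventions for ChatClient observations

2. ChatClientObservationContext: the context object that carries the data for a ChatClient observation

3. ObservationRegistry (Micrometer): the registry through which observations are created and handlers are registered

4. TracingObservationHandler: the handler that turns observations into trace spans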
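The way these pieces interact can be sketched with a toy model in plain Java. This is deliberately not Micrometer's API: `Context`, `Handler`, `Registry`, and both handler classes below are simplified stand-ins invented for illustration, showing only the general idea that a registry wraps a call and notifies metrics-style and tracing-style handlers at start and stop.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Supplier;

// Toy model of the observation flow; all types here are hypothetical stand-ins.
final class ObservationFlowSketch {

    /** Carries the observation name, tags, and timing for one observed call. */
    static final class Context {
        final String name;
        final Map<String, String> tags = new HashMap<>();
        long startNanos;
        long durationNanos;

        Context(String name) {
            this.name = name;
        }
    }

    /** Receives lifecycle callbacks; stands in for a metrics or tracing handler. */
    interface Handler {
        void onStart(Context context);

        void onStop(Context context);
    }

    /** Holds the handlers and wraps a call with start/stop notifications. */
    static final class Registry {
        private final List<Handler> handlers = new ArrayList<>();

        void addHandler(Handler handler) {
            handlers.add(handler);
        }

        <T> T observe(Context context, Supplier<T> call) {
            context.startNanos = System.nanoTime();
            handlers.forEach(h -> h.onStart(context));
            try {
                return call.get();
            } finally {
                // Handlers are notified even if the observed call throws.
                context.durationNanos = System.nanoTime() - context.startNanos;
                handlers.forEach(h -> h.onStop(context));
            }
        }
    }

    /** Metrics-style handler: counts completed observations per name. */
    static final class CountingHandler implements Handler {
        final Map<String, Integer> counts = new HashMap<>();

        public void onStart(Context context) {
            // Nothing to record at start for a simple counter.
        }

        public void onStop(Context context) {
            counts.merge(context.name, 1, Integer::sum);
        }
    }

    /** Tracing-style handler: records start and stop events in order. */
    static final class SpanLogHandler implements Handler {
        final List<String> events = new ArrayList<>();

        public void onStart(Context context) {
            events.add("start " + context.name);
        }

        public void onStop(Context context) {
            events.add("stop " + context.name);
        }
    }

    public static void main(String[] args) {
        Registry registry = new Registry();
        CountingHandler metrics = new CountingHandler();
        SpanLogHandler tracing = new SpanLogHandler();
        registry.addHandler(metrics);
        registry.addHandler(tracing);

        Context context = new Context("demo.chat.call");
        context.tags.put("spring.ai.kind", "chat_client");
        String result = registry.observe(context, () -> "translated text");

        System.out.println(result);
        System.out.println(metrics.counts);
        System.out.println(tracing.events);
    }
}
```

The point of the design is that the instrumented code is written once, and metrics and traces are both derived from the same start/stop events by whichever handlers are registered.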

References

[1] start.spring.io: https://start.spring.io