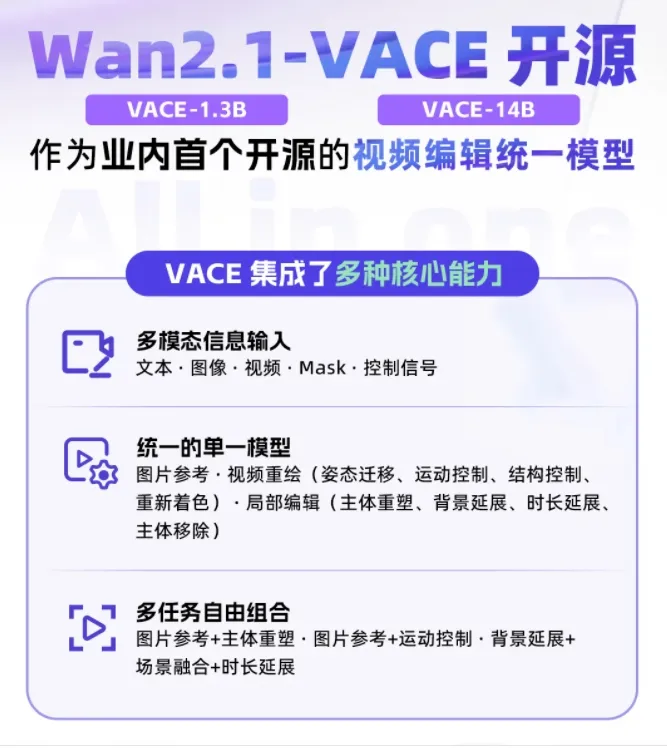

Wanxiang "Wan2.1-VACE" has been announced as open-source, marking a major technological revolution in the video editing field. The 1.3B version of Wan2.1-VACE supports 480P resolution, while the 14B version supports both 480P and 720P resolutions. The emergence of VACE brings users a one-stop video creation experience, allowing them to complete various tasks such as text-to-video generation, image reference generation, local editing, and video extension without frequently switching between different models or tools, greatly improving their creative efficiency and flexibility.

The strength of VACE lies in its controllable redraw capability, which can generate based on human pose, motion flow, structure preservation, spatial movement, and coloring controls. It also supports video generation based on subject and background references. This makes operations such as adjusting character posture, action trajectories, or scene layouts much easier after video generation is completed. The core technology behind VACE is its multimodal input mechanism, which builds a unified input system that combines text, images, videos, masks, and control signals. For image inputs, VACE can support object reference images or video frames; for video inputs, users can use VACE to regenerate by erasing or locally expanding; for specific regions, users can specify editing areas with binary 0/1 signals; for control signals, VACE supports depth maps, optical flows, layouts, grayscale, line drawings, and poses.

VACE not only allows for content replacement, addition, or deletion in specified video regions but also completes the entire video duration based on any segment or initial/final frames in the time dimension. In the spatial dimension, it supports extending and generating edge or background regions of the screen, such as replacing backgrounds — retaining the subject unchanged while replacing the background environment according to prompts. Thanks to its powerful multimodal input module and Wan2.1's generative capabilities, VACE can easily handle functions traditionally achieved by expert models, including image reference capabilities, video redrawing abilities, and local editing capabilities. Additionally, VACE supports the free combination of various single-task capabilities, breaking through the collaborative bottleneck of traditional expert models working independently. As a unified model, it naturally integrates atomic capabilities such as text-to-video generation, pose control, background replacement, and local editing without needing to train new models for individual functionalities.

VACE’s flexible combination mechanism not only significantly simplifies the creative process but also greatly expands the creative boundaries of AI video generation. For example, combining picture references with subject reshaping can replace objects in videos; combining motion control with first-frame references can control static image postures; combining picture references, first-frame references, background expansion, and duration extension can convert vertical images into horizontal videos while adding elements from reference images. By analyzing and summarizing the input forms of four common tasks (text-to-video, image-to-video, video-to-video, and partial video generation), VACE proposes a flexible and unified input paradigm — Video Condition Unit (VCU). VCU summarizes multi-modal context inputs into three forms: text, frame sequences, and mask sequences, unifying the input formats of four types of video generation and editing tasks. The frame sequences and mask sequences of VCU can be mathematically stacked, creating conditions for free task combinations.

In terms of technical implementation, one of the major challenges VACE faces is how to uniformly encode multimodal inputs into token sequences that diffusion Transformers can process. VACE conceptually decouples the Frame sequence in the VCU input, dividing it into RGB pixels (unchanged frame sequences) that need to be preserved intact and contents that need to be regenerated according to prompts (changeable frame sequences). Then, these three types of inputs (changeable frames, unchanged frames, and masks) are encoded in latent space. Changeable frames and unchanged frames are encoded into the same space as the DiT model noise dimensions using a VAE, with a channel number of 16; while the mask sequence is mapped to a latent space feature with consistent spatiotemporal dimensions and a channel number of 64 through deformation and sampling operations. Finally, the latent space features of the Frame sequence and mask sequence are combined and mapped through trainable parameters into DiT’s token sequence.

In training strategies, VACE compared two approaches: global fine-tuning and context adapter fine-tuning. Global fine-tuning achieves faster inference speed by training all DiT parameters, while context adapter fine-tuning fixes the original base model parameters and selectively copies and trains some of the original Transformer layers as additional adapters. Experiments show that there is little difference in validation loss between the two methods, but context adapter fine-tuning converges faster and avoids the risk of losing basic capabilities. Therefore, this open-source version used the context adapter fine-tuning method for training. Through quantitative evaluations of the released VACE series models, it can be seen that the model shows significant improvements over the 1.3B preview version on multiple key metrics.

- GitHub: https://github.com/Wan-Video/Wan2.1

- ModelScope: https://modelscope.cn/organization/Wan-AI

- Hugging Face: https://huggingface.co/Wan-AI

- Domestic Site: https://tongyi.aliyun.com/wanxiang/

- International Site: https://wan.video