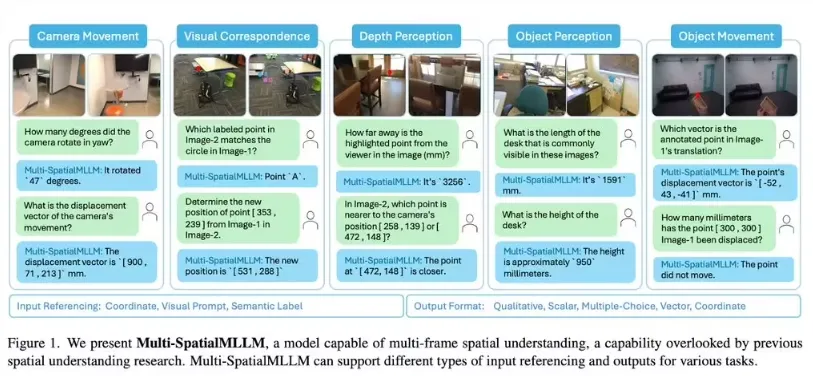

科技巨头 Meta 与香港中文大学的研究团队联合推出了 Multi-SpatialMLLM 模型,这一新框架在多模态大语言模型(MLLMs)的发展中取得了显著进展,尤其是在空间理解方面。该模型通过整合深度感知、视觉对应和动态感知三大组件,突破了以往单帧图像分析的限制,为更复杂的视觉任务提供了强有力的支持。

近年来,随着机器人和自动驾驶等领域对空间理解能力的需求不断增长,现有的 MLLMs 面临着诸多挑战。研究发现,现有模型在基础空间推理任务中表现不佳,例如,无法准确区分左右方向。这一现象主要源于缺乏专门的训练数据,且传统的方法往往只能基于静态视角进行分析,缺少对动态信息的处理。

为了解决这一问题,Meta 的 FAIR 团队与香港中文大学共同推出了 MultiSPA 数据集。该数据集覆盖了超过2700万个样本,涵盖多样化的3D 和4D 场景,结合了 Aria Digital Twin 和 Panoptic Studio 等高质量标注数据,并通过 GPT-4o 生成了多种任务模板。

此外,研究团队设计了五个训练任务,包括深度感知、相机移动感知和物体大小感知等,以此来提升 Multi-SpatialMLLM 在多帧空间推理上的能力。经过一系列测试,Multi-SpatialMLLM 在 MultiSPA 基准测试中的表现十分优异,平均提升了36%,在定性任务中的准确率也达到了80-90%,显著超越了基础模型的50%。尤其是在预测相机移动向量等高难度任务上,该模型也取得了18% 的准确率。

在 BLINK 基准测试中,Multi-SpatialMLLM 的准确率接近90%,平均提升了26.4%,超越了多个专有系统。而在标准视觉问答(VQA)测试中,该模型也保持了其原有的性能,显示了其在不依赖过度拟合空间推理任务的情况下,依然具有良好的通用能力。

划重点:

🌟 Meta 推出的 Multi-SpatialMLLM 模型显著提升了多模态大语言模型的空间理解能力。

📊 新模型通过整合深度感知、视觉对应和动态感知三大组件,克服了单帧图像分析的局限。

🏆 Multi-SpatialMLLM 在多项基准测试中表现优秀,准确率大幅提升,超越传统模型。